Identifying Temporal Correlations Between Natural Single-shot Videos and EEG Signals

Abstract

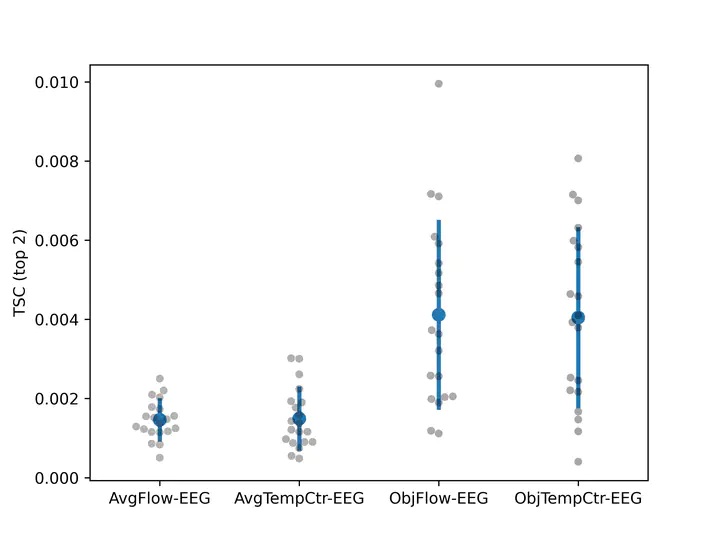

Electroencephalography (EEG) is a widely used technology for recording brain activity in brain-computer interface (BCI) research, where understanding the encoding-decoding relationship between stimuli and neural responses is a fundamental challenge. Recently, there has been growing interest in encoding and decoding natural stimuli in a single-trial setting, in contrast to the traditional BCI literature, where multi-trial presentations of synthetic stimuli are commonplace. While EEG responses to natural speech have been extensively studied, stimulus-following EEG responses to natural video footage remain underexplored. We collect a new EEG dataset with subjects passively viewing a film clip and extract a few video features that have been found to be temporally correlated with EEG signals. However, our analysis reveals that these correlations are mainly driven by shot cuts in the video. To avoid the confounds related to shot cuts, we construct another EEG dataset with natural single-shot videos as stimuli and propose a new set of object-based features. We demonstrate that previous video features lack robustness in capturing the coupling with EEG signals in the absence of shot cuts, and that the proposed object-based features exhibit significantly higher correlations. Furthermore, we show that the correlations obtained with the proposed features are not predominantly driven by eye movements. Additionally, we quantitatively verify the superiority of the proposed features in a match-mismatch task. Finally, we evaluate the extent to which the proposed features explain the variance in coherent stimulus responses across subjects. This work provides valuable insights into feature design for video-EEG analysis and paves the way for applications such as visual attention decoding.